Writing//Posthuman: The Literary Text as Cognitive Assemblage

N. Katherine Hayles

Abstract: Posthuman studies have developed in many different directions, which may be charted according to the role they assign to computational technologies. Arguing that computational media have been highly influential in the spread of posthumanism and its conceptualizations, this essay illustrates the importance of digital technologies for writing in the contemporary era. Humans and computational media participate in hybrid interactions through cognitive assemblages, networks through which information, interpretations, and meanings circulate. Illustrating these interactions are analyses of two works of electronic literature, Sea and Spar Between by Nick Montfort and Stephanie Strickland, and Evolution by Johannes Heldén and Håkan Jonson.

Keywords: posthuman; writing; electronic literature; cognitive assemblages

Friedrich Kittler has famously argued that the shift from handwriting to typewriter inscription correlated with a massive shift in how writing, and the voice it seemed to embody, functioned within the discourse networks of 1800 and 1900, respectively (Kittler, Discourse Networks). We are in the midst of another shift, even more consequential than the one Kittler discussed: from electromechanical inscription (e.g., manual and electric typewriters) to writing produced by computational media. If I were to imagine a contemporary sequel to Kittler’s influential text, say Discourse Networks 1900/2000, it would argue that the issue is now not merely the role of the voice but the status of humans in relation to cognitive technologies. As computational media increasingly interpenetrate human complex systems, forming what I call cognitive assemblages, humans in developed societies such as North America, Europe, Japan, and China are undergoing complex social, cultural, economic, and biological transformations that mark them as posthumans.

But I am getting ahead of my story, so let us return to the essay’s title for further explication. The double slash refers in part to the punctuation mark found in all URLs, read by computers as indicating that a locator command follows, thus performing the critical move that made the web possible: standardizing formatting codes rather than devices. For an analogy to highlight how important this innovation was, we might think of an imaginary society in which every make of car requires a different fuel. The usefulness of cars is limited by the availability of stations selling the “right” fuel, a logistical nightmare for drivers, car manufacturers, and fuel producers. Then someone (historically, Tim Berners-Lee) has a terrific idea: rather than focus on the cars and allow each manufacturer to determine its fuel requirements, why not standardize the fuel and then require each manufacturer to make the vehicle’s equipment compatible with it? Suddenly cars have increased mobility, able to travel freely without needing to know whether the right stations will be on their route; cars proliferate because they are now much more useful; and traffic explodes exponentially. The double slash references the analogous transformation in global communications and all the pervasive changes it has wrought.
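In the generic URL syntax (standardized in RFC 3986), the double slash is what tells a parser that a network location, the host, comes next. A small illustration using Python’s standard `urllib.parse.urlsplit`; the `example.org` address is a placeholder chosen for the sketch, not a real resource discussed in this essay.

```python
from urllib.parse import urlsplit

# With the double slash, the parser reads what follows as the network
# location (the host) and only then begins the path.
with_slashes = urlsplit("https://example.org/moby-dick.html")
print(with_slashes.netloc)    # example.org
print(with_slashes.path)      # /moby-dick.html

# Without it, the same characters are treated as a relative path,
# and no host is identified at all.
without = urlsplit("example.org/moby-dick.html")
print(repr(without.netloc))   # ''
print(without.path)           # example.org/moby-dick.html
```

Because every browser and server agrees on this one convention, any machine can locate any resource without knowing anything about the device that hosts it.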

What about the two terms the double slash connects and divides, “writing” and “posthuman”? Since I helped to initiate posthuman studies nearly twenty years ago by publishing the first scholarly monograph on the topic (Hayles, How We Became Posthuman), versions of the posthuman have proliferated enormously. In my book, I argued that a series of information technologies, including cybernetics, artificial intelligence, robotics, artificial life, and virtual reality, successively undercut traditional assumptions about the liberal humanist subject, breaking open the constellation of traits traditionally considered to constitute human beings and laying the groundwork for new visions to emerge. Evaluating these new configurations, I urged resistance to those that supposed the biological body could or should be abandoned, allowing humans to achieve immortality by uploading consciousness into a computer. The theoretical underpinning for this power fantasy was (and is) a view of information as a disembodied entity that can flow effortlessly between different substrates. Consequently, part of the theoretical challenge that the posthuman poses is to develop different theories of information that connect it with embodiment and context, and hence with meaning.

1. Why Posthumanism//Why Now?

Two decades later, cultural and theoretical trends have led posthuman studies in diverse directions. Particularly noteworthy is the plethora of approaches that see positive roles for the posthuman as antidotes to the imperialistic, rationalistic, and anthropocentric versions of the human that emerged in the Enlightenment. Although a full analysis of these trends is beyond this essay’s scope, contemporary versions of the posthuman can be roughly divided between those that see technological innovations as central and others that focus on different kinds of forces, structures, and environments to posit the posthuman subject. The catalysts for these versions are diverse, ranging from the huge influence of Gilles Deleuze’s philosophy, to arguments that challenge the anthropocentric privileging of humans over nonhumans and see the posthuman as a way to achieve this, to a growing sense of crisis over the human impact on earth systems and a corresponding desire to re-envision the human in posthuman terms. Of course, these categories are far from airtight, and many positions incorporate elements of several different trends. Nevertheless, the centrality of technology, or lack of it, provides a useful way to group these different versions and locate my own position in relation to them.

On the non-technological side is the “sustainable subject” envisioned by Rosi Braidotti (Braidotti, “The Ethics”; The Posthuman). Influenced by Deleuze, Braidotti departs from his philosophy by wanting to salvage a coherent subjectivity, albeit in modified form as an entity open to the desires, intensities, and lines of flight that Deleuze evokes as he and his co-author Guattari write against the subject, the sign, and the organism. Braidotti urges her readers to become subjects open to flows so intense that they are almost, but not quite, taken to the breaking point, “cracking, but holding it, still” (“The Ethics” 139), even as the currents rip away most conventional assumptions about what constitutes the human subject.

Also on this side is Cary Wolfe’s proposal for posthumanism, which he identifies with his original synthesis of Luhmannian systems theory and Derridean deconstruction. Like Braidotti’s, Wolfe’s version of posthumanism is virtually devoid of technological influence. The central dynamic, as he sees it, is a recursive doubling back of a system on itself, in response to and defense against the always-greater complexity of the surrounding environment. This structural dynamic is essentially transhistorical, demonstrating only a weak version of historical contingency through secondary mechanisms that emerge as the system differentiates into subsystems as a way to increase its internal complexity. He defines posthumanism in a way that excludes most if not all technological posthumanisms, a breathtakingly arrogant move that does not, of course, keep those who want to include technology from also claiming title to the posthuman.

At the other end of the spectrum are visions of the posthuman that depend almost entirely on future technological advances. Nick Bostrom (2014) speculates on how humans may transform themselves, for example through whole brain emulation, an updated version of the uploading scenario. This not-yet-existing technology would use scanning techniques to create a three-dimensional map of neuronal networks, which would then be uploaded to a computer to re-create the brain’s functional and dynamic capacities. In other scenarios, he imagines using genetic engineering such as CRISPR gene editing to go beyond present-day capabilities of targeting gene-specific diseases such as Huntington’s and leap forward to techniques that address whole-genomic complex traits such as cognitive and behavioral capabilities (45). This could be done, he suggests, by creating multiple embryos and choosing the one with the most desirable genetic characteristics. In an even creepier version of future eugenic interventions, he discusses iterated genome selection, in which gametes are developed from embryonic stem cells, and then that generation is used to develop more gametes, each time selecting the most genetically desirable embryos, thus compressing multiple generations of selection into a single maturation period. Whereas theorists like Braidotti and Wolfe are primarily concerned with ethical and cultural issues, for Bostrom the main focus is on technological possibilities. As the above scenarios suggest, he gives minimal consideration to the ethics of posthuman transformations and their cultural implications.

Also on this end of the spectrum is philosopher David Roden (2014), who asks under what conditions posthuman life (either biological or computational) might be developed, and what the relation of such life would be to humans. He assumes that to count as posthuman, an entity must be radically different from humans as we know them, having emerged as a result of what science fiction writer Vernor Vinge calls the “singularity” (Vinge, “The Coming”), the historical moment when humans invent a life form vastly superior to themselves in intelligence and capabilities. He concludes, unsurprisingly given his premises, that humans will be unable to control the direction or activities of posthuman life and that it is entirely possible such emergence will mark the end of the human species. While Roden is deeply concerned with ethical issues, he resolutely refuses an anthropocentric perspective and judges posthuman life on its own merits rather than from a human viewpoint (although one may question how this is possible). His version of the posthuman finds many reflections in contemporary novels and films, with the difference that he refuses to speculate on what form posthuman life might take. He argues that a rigorous philosophical evaluation requires that the future be left entirely open-ended, since we cannot know what will arise and any prediction we might make could be wildly off the mark. His stance recalls Yogi Berra’s quip, “It’s tough to make predictions, especially about the future.”

The problem with all these versions of the posthuman, as I see it, is that they either refuse the influence of technology altogether or else rely on future technologies that do not yet exist. While speculation about an unknown future may have some usefulness, in that it stimulates us to think about present choices, Roden’s careful avoidance of predictions about the forms posthuman life might take means that his analysis is significantly circumscribed in scope. By contrast, my approach is to find a middle way that acknowledges the seminal role computational media play in transforming how we think of human beings, and yet remains grounded in already-existing technologies widely acknowledged to have immensely influenced present societies.

Why should we regard technology as central to the posthuman? While it is possible to suppose that notions of posthumanism may have arisen earlier, say before 1950 when computation really took off, I think such ideas would have remained esoteric and confined to small elites (Nietzsche’s “Übermensch” is one such example). Posthumanism only became a central cultural concern when everyday experiences made the idea of a historical rupture not only convincing but obvious, providing the impetus to think of the “human” as something that had become “post.” Computational media have now permeated virtually every aspect of modern technologies, including automobiles, robotic factories, electrical grids, defense systems, communication satellites, and so forth. Their catalyzing and pervasive effects have in my view played a pivotal role in ushering in the “posthuman,” with all of its complexities and diverse articulations.

My own version has been called “critical posthumanism” (Roden 44-45) and “technological posthumanism” (Cecchetto 63-92). I note that both of these categories tend to elide the differences between my views and those of people who espouse transhumanism. From my perspective this difference is important, because transhumanism usually implies that humans should seek to transform their biological and cognitive limits, whereas I am much more interested in conditions that already exist and the ways in which they are transforming how “the human” is performed and conceptualized. My writing is directed not to the future or the past but to the complexities of the hybrid human-technical systems that constitute the infrastructural necessities of contemporary life.

With its sense of marking some kind of rupture in the human, the posthuman offers opportunities to re-think humans in relation to their built world and to the planetary ecologies in which all worlds, human and nonhuman, are immersed. My contribution toward this effort is articulated in my book Unthought: The Power of the Cognitive Nonconscious. Drawing on recent work in neuroscience, cognitive science, and other fields, I develop the idea of the cognitive nonconscious, a mode of neuronal processing inaccessible to consciousness but nevertheless performing functions essential for consciousness to operate. This research invites a reassessment of the role of consciousness in human life in ways that open out onto a much broader sense of cognition that extends beyond the brain into the body and environment. It also enables a comparison between human nonconscious cognition and organisms that do not possess consciousness or even central nervous systems, such as plants. It encourages us to build bridges between the cognitions immanent in all biological lifeforms and technical cognitive media such as computers. The result, I argue, is a framework in which the anthropocentric emphasis on human consciousness is decentered and located within a much deeper, richer, and broader cognitive ecology encompassing the planet. This is the context in which we should understand the pervasiveness and importance to the posthuman of hybrid human-technical systems, which I call cognitive assemblages.

Cognitive assemblages are tighter or looser collections of technical objects and human participants through which cognitions circulate in the form of information, interpretations, and meanings. My use of assemblage overlaps with that of Deleuze and Guattari (A Thousand Plateaus; see also DeLanda, Assemblage Theory), although it has some differences as well. Since Deleuze and Guattari want to deconstruct the idea of pre-existing entities, the assemblage in their view is a constantly mutating collection of ongoing deterritorializations and reterritorializations, subverting the formation of stable identities. By contrast, my idea of cognitive assemblages is entirely consistent with pre-existing entities such as humans and technical systems. My framework also overlaps with Bruno Latour’s Actor-Network-Theory (Latour, Reassembling the Social), but whereas Latour places humans and material forces on the same plane, my emphasis on cognition draws an important distinction between cognitive entities on the one hand and material forces such as tornadoes, hurricanes, and tsunamis on the other. The crucial distinguishing features, I argue, are choice and interpretation, activities that all biological lifeforms perform but that material forces do not. Choice and interpretation are also intrinsic to the design of computational media, where choice (or selection) is understood as the interpretation of information rather than anything having to do with free will.

What is cognition, in my view? I define it as “the process of interpreting information in contexts that connect it with meaning.” Although space will not allow me to fully parse this definition here, suffice it to say that I see cognition as a process, not an entity, and moreover as taking place in specific contexts, which strongly connects it with embodiment for biological lifeforms and with particular instantiations for computational media. This definition sets a low bar for something to count as cognitive; in Unthought, I argue that all lifeforms have some cognitive capabilities, even plants. Choice (or selection) enters in because it is necessary for interpretation to operate; if there is only one possible selection, any opening for interpretation is automatically foreclosed. The definition hints at a view of meaning very different from the anthropocentric sense it traditionally has as hermeneutic activities performed by humans. Rather, meaning here is oriented toward a pragmatist sense of the consequences of actions, a perspective that applies to cognitive technologies as well as lifeforms and leads to the claim that computers can also generate, interpret, and understand meanings.

2. Writing Posthuman//Posthuman Writing

Let us turn now to the other term in my title, “writing,” and consider the complex ways it relates to “posthuman.” I wrote my dissertation on a manual typewriter, and lurking somewhere within my synapses is the muscle memory of that activity, including how much key pressure is necessary to make the ink impression on paper dark enough but not so sharp as to tear the paper, and how fast the keys can be hit without having them jam together. There was no mystery about this process; everything was plain and open to view, from the mechanical key linkages to the inked ribbon snaking through the little prongs that held it in place above the paper. Nowadays, other than scribbled grocery lists and greeting card notes, I write entirely on a computer, as do most people in developed countries.

Here, by contrast, mystery abounds. The surface inscription I see on the screen is generated through a complex series of layers of interacting codes, starting at the most basic level with circuits and logic gates, which in turn interact with microcode (hardwired or floating), up to machine code and the instruction set architecture for the machine, which interacts with the system software or operating system, which in turn interfaces with assembly language, on up to high-level languages such as C++, Java, and Python, and finally to executable programs such as Microsoft Word. As I write, multiple operations within these levels of code are running to check my spelling, grammar, and sentence structures. In a very real sense, even when I compose alone in a room, I have a whole series of machinic collaborators. If I go online to check definitions, references, and concepts, the circle of collaborators expands exponentially, including humans who contribute to such sites as Wikipedia, computers that generate the code necessary for my queries to travel through cyberspace, and network infrastructures that carry the packets along internet routes.
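One way to glimpse a single step of this layering is to ask a language runtime to show the instructions that sit beneath a line of source code. The sketch below uses Python’s standard `dis` module; it exposes only CPython’s bytecode, one intermediate layer, not the processor’s machine code or the circuits below it, and the `greet` function is a hypothetical example.

```python
import dis

def greet(name: str) -> str:
    # A single line of high-level source code...
    return "Hello, " + name

# ...is compiled by CPython into a lower layer of bytecode instructions
# that the interpreter executes. Opcode names differ between Python
# versions, so the exact listing printed here will vary.
for instruction in dis.get_instructions(greet):
    print(instruction.opname, instruction.argrepr)
```

Even this modest listing shows that what the writer sees as one statement is, for the machine, a sequence of separate operations.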

The mere act of composing anything on a computer enrolls me in cognitive assemblages of fluctuating composition as I invoke various functionalities, programs, and affordances. In this sense my digital writing is always already posthuman. When I write about posthumanism, the condition becomes conceptual as well as technological, for I am immersed in the very environments that I wish to analyze, a systemic recursivity that, as we have seen, is characteristic of some versions of posthumanism. This is another meaning of my title’s double slash, connoting by its repetitive mark the folding back of writing about posthumanism into the posthuman condition of technical//human cognitions circulating within cognitive assemblages.

Among the theorists interrogating this posthuman condition is Dennis Tenen in Plain Text: The Poetics of Computation (2017). “Extant theories of interpretation,” he writes, “evolved under conditions tied to static print media. By contrast, digital texts change dynamically to suit their readers, political contexts, and geographies... I advocate for the development of computational poetics, a strategy of interpretation capable of reaching past surface content to reveal platforms and infrastructures that stage the construction of meaning” (6). He contrasts pen and paper (and I might add to this my un-mysterious mechanical typewriter) with digital writing, where “the bridge between keyboard and screen passes through multiple mediating filters. Writing itself becomes a programmed experience” (14). He refers to digital writing as a “textual laminate,” emphasizing (by analogy with laminated products such as plywood) the multiple layers of code that comprise it. When printed out, the textual laminate is flattened to a two-dimensional sheet, but when computer processes are running, it is a multidimensional network of interactions stretching across the globe.

Motivating Tenen’s concern with digital writing are the “new forms of technological control” (3) embedded in the layers of machinic code, including copyright restrictions that prohibit the user from accessing the deeper layers of proprietary code, much less intervening in them. Therefore, he argues that to “speak truth to power—to retain a civic potential for critique—we must therefore perceive the mechanisms of its codification. Critical theory cannot otherwise endure apart from material contexts of textual production, which today emanate from the fields of computer science and software engineering” (3). Moreover, in cases where using specific software packages such as Adobe Acrobat requires assent to conditions of use that he regards as unacceptable, he advocates boycotting them altogether in favor of “plain text,” text written with a simple editing program such as TextEdit that uses minimal coding instructions. By contrast, with PDFs “the simple act of taking notes becomes a paid feature of the Adobe Acrobat software. What was gained in a minor [...] convenience of formatting is lost in a major concession to the privatization of public knowledge” (194).

This aspect of his project strikes me as analogous to Thoreau’s attempt at Walden to leave civilization behind and live in a “purer” state of nature, uncontaminated by then-contemporary amenities (Thoreau, Walden). This approach reasons that if technological control is a problem, then one should retreat to practices that abjure it as much as possible. To his credit, Tenen recognizes that such a stance risks inconsistency, because while one can avoid using PDFs (with some effort and inconvenience), one can scarcely make the same kind of decisions regarding, say, water purification plants, the electrical grid, and modern transportation networks and still participate in the contemporary world. Tenen defends his argument that we must understand the laminate text, including even the quantum physics entailed in a computer’s electronics, with the following reasoning. “Why insist on physics when it comes to computers,” he asks rhetorically, when one might drive a car without insisting on knowing exactly how all its components work? He answers, “Computers [...] are dissimilar to cars in that they are epistemic, not just purely instrumental, artifacts. They do not just get us from point A to point B; they augment thought itself, therefore transforming what it means to be human” (51).

While I wholeheartedly agree with his conclusion that computers are epistemic, I am less sure of his assertion about cars, which in their ability to transport us rapidly from one environment to another might also be considered epistemic, in that they alter the world horizons within which we live. The better argument, I think, is to say that Tenen is especially concerned with computers because he writes for a living, and hence writing to him is more than instrumental: it is a way of life and a crucial component of how he knows the world and conveys his thoughts to others.

His approach, therefore, has special relevance to literary theory and criticism. One of his central claims is that the underlying layers of code “affect all higher-level interpretive activity” (2), so that literary analysis can no longer remain content with reading surface inscriptions but must plunge into the code layers to discern what operations are occurring there. This concern is reflected within the emerging field of electronic literature in a rich diversity of ways. Here I should clarify that whereas all writing on a computer is necessarily digital, “electronic literature” refers specifically to digital writing that can lay claim to the affordances and resources of literary texts and literature in general.

An analogy with artists’ books may be helpful here. Johanna Drucker, in her definitive book on twentieth-century artists’ books (Drucker, The Century of Artists’ Books), suggests that the special characteristic that distinguishes artists’ books from books in general is their mission to interrogate the book as an artistic form. What makes a book, and how far can its boundaries be stretched while the object is still recognized as a “book”? What about book-sized marble slab covers incised with letters and no pages, or a book with many different kinds of paper but no ink (Hayles, Writing Machines 74)? Such projects draw our attention to our presuppositions about what a book is and thereby invite meditations on how we think about bookishness.

Similarly, electronic literature asks how far the boundaries of the “literary” may be stretched and still have the work perform as literature, for example in works where words are fused with, and sometimes replaced by, animations, images, sounds, and gestures. How do our ideas about literature change when the text becomes interactive, mutable, intermedial, and transformable by human actions, including even breathing (see, for example, Kate Pullinger’s iPhone story “Breathe,” 2018)? These questions imply that electronic literature is a special form of digital writing that interrogates the status of writing in the digital age by using the resources of literature, even as it also brings into question what literature is and can be.

3. Post//Code//Human

To see how works of electronic literature encourage users to plunge into the underlying code, I turn to Sea and Spar Between, a collaborative work authored by Nick Montfort, a Ph.D. in computer science who also creates works of generative poetry, and Stephanie Strickland, a prize-winning poet who works in both print and electronic media. The authors chose passages from Melville’s Moby-Dick to combine with Emily Dickinson’s poems; the program’s code combines fragments from the two sources to create quatrains composed of two couplets, including juxtaposing Melville’s and Dickinson’s words and even creating compound neologisms with one syllable from Dickinson and the other from Melville. The output is algorithmically determined, although of course the human authors devised the code and constructed the database from which the algorithms draw for their recombinations. The screenic display is a light blue canvas on which the quatrains appear in a darker blue, color tones that metaphorically evoke the ocean, with the couplets performing as “fish.”
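The compounding step can be pictured as a small combinatorial operation: any fragment from one source may be joined to any fragment from the other, so even short lists multiply quickly. This is a hypothetical Python sketch, not code from Sea and Spar Between (which runs in the browser); the fragment lists and the `compounds` helper are invented for illustration, and the essay does not say the work enumerates compounds this way.

```python
from itertools import product

# Hypothetical fragments chosen only for illustration; they are NOT the
# lexicons the authors actually selected from Dickinson and Melville.
DICKINSON_PARTS = ["sun", "frost", "noon"]
MELVILLE_PARTS = ["spar", "whale", "mast"]

def compounds(first: list[str], second: list[str]) -> list[str]:
    """Join every part of `first` to every part of `second`, in that order."""
    return [a + b for a, b in product(first, second)]

words = compounds(DICKINSON_PARTS, MELVILLE_PARTS)
print(len(words))  # 9
print(words[:3])   # ['sunspar', 'sunwhale', 'sunmast']
```

With three fragments on each side there are already nine possible neologisms; which compound actually appears at a given place is decided by the work’s own code.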

Locations are defined through “latitude” and “longitude” coordinates, each with 14,992,383 positions, resulting in about 225 trillion stanzas, roughly the number, the authors estimate, of fish in the sea. As Stuart Moulthrop points out (Moulthrop and Grigar 35), the numbers are staggering and indicate that the words displayed on a screen, even at the farthest zoom-out setting, are only a small portion of the entire conceptual canvas.
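The arithmetic behind the figure is simple: if each (latitude, longitude) pair holds one stanza, the number of positions on each axis is squared. A quick check in Python, assuming, as the essay implies, exactly one stanza per coordinate pair:

```python
# Figure as given in the essay: each axis has 14,992,383 positions.
POSITIONS_PER_AXIS = 14_992_383

total_stanzas = POSITIONS_PER_AXIS ** 2
print(f"{total_stanzas:,}")           # 224,771,548,018,689
print(round(total_stanzas / 10**12))  # 225 (trillion)
```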

The feeling of being “lost at sea” is accentuated by the work’s extreme sensitivity to cursor movements, resulting in a highly “jittery” feel, suggestive of a fish’s rapid and erratic flashings as it swims. It is possible to locate oneself in this sea of words by entering a latitude/longitude position in a box at the bottom of the screen. This move will result in the same set of words appearing on screen as were previously displayed at that position. Conceptually, then, the canvas pre-exists in its entirety, even though in practice the very small portion displayed on the screen at a given time is computed “on the fly,” because keeping this enormous canvas in computer memory all at once would be prohibitively memory-intensive, far exceeding even contemporary processing and storage limits.
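The behavior described here, where a position yields the same words on every visit even though nothing is stored, is what any deterministic generator seeded by its coordinates provides. The following Python sketch illustrates that principle only; it is not Montfort and Strickland’s code, and the word lists, the `stanza_at` function, and the seeding scheme are all hypothetical.

```python
import random

# Hypothetical stand-in fragments; the real work draws on lexicons the
# authors selected from Moby-Dick and Dickinson's poems.
MELVILLE = ["the whale", "a spar", "the sea", "the mast"]
DICKINSON = ["a bee", "the dew", "at noon", "the soul"]

def stanza_at(lat: int, lon: int) -> list[str]:
    """Compute the four lines shown at one grid position, on demand.

    The generator is seeded from the coordinates alone, so the stanza is a
    pure function of (lat, lon): nothing is stored, yet returning to a
    position reproduces the same words.
    """
    # random.Random hashes a string seed deterministically, so the same
    # coordinates give the same sequence of choices on every run.
    rng = random.Random(f"{lat},{lon}")
    return [f"{rng.choice(MELVILLE)} and {rng.choice(DICKINSON)}" for _ in range(4)]

first_visit = stanza_at(7_000_000, 12_345)
second_visit = stanza_at(7_000_000, 12_345)
print(first_visit == second_visit)  # True
```

Computing rather than storing is the practical choice: at roughly 225 trillion positions, even a hypothetical 100 bytes per stanza would come to about 22 petabytes.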

Figure 1. Screen shot of Sea and Spar Between, at closest zoom. Image courtesy of Stephanie Strickland, used with permission.

The effect is a kind of technological sublime, as the authors note in one of their comments when they remark that the work signals “an abundance exceeding normal, human scale combined with a dizzying difficulty of orientation.” The authors reinforce the idea of a reader lost at sea in their essay on this work, “Spars of Language Lost at Sea” (Montfort and Strickland 2013). They point out that randomness does not enter into the work until the reader opens it and begins to read. “It is the reader of Sea and Spar Between who is deposited randomly in an ocean of stanza each time she returns to the poem. It is you, reader, who are random” (8).

How does the work invite the reader to plunge into the underlying code? The invitation takes the form of an essay that the authors have embedded within the source code, marked off by double slashes (yet another connotation of my title), the conventional coding mark used to indicate comments (that is, non-executable statements). The essay is entitled “cut to fit the toolspun course,” a phrase generated by the program itself. The comments make clear that human judgments played a large role in text selection, whereas computational power was largely expended on creating the screen display:

//most of the code in Sea and Spar Between is used to manage the
//interface and to draw the stanzas in the browser’s canvas region. Only
//2609 bytes of the code (about 22%) are actually used to combine text
//fragments and generate lines. The remaining 5654 bytes (about 50%)
//deals with the display of the stanzas and with interactivity.

By contrast, the selection of texts was an analog procedure, intuitively guided by the authors’ aesthetic and literary sensibilities.

//The human/analog element involved jointly selecting small samples of
//words from the authors’ lexicons and inventing a few ways of generating
//lines. We did this not quantitatively, but based on our long acquaintance
//with the distinguishing textual rhythms and rhetorical gestures of Melville
//and Dickinson.

The essay-as-comment functions both to explain the operation of specific sections of the code and to provide a commentary on the project itself, functioning in this role as literary criticism. The comments, plus the code they explicate, make clear the extent of the computer’s role as a collaborator. The computer knows the display parameters, how to draw the canvas, how to locate points on this two-dimensional surface, and how to center a user’s request for a given latitude and longitude. It also knows how to count syllables and what parts of words can combine to form compound words. It knows, the authors comment, how “to generate each type of line, assemble stanzas, draw the lattice of stanzas in the browser, and handle input and other events.” That is, it knows when input from the user has been received and what to do in response to a given input. What it does not know, of course, are the semantic meanings of the words and the literary allusions and connotations evoked by specific combinations of phrases and words.

Figure 2. Screen shot of Sea and Spar Between, taken at medium zoom. Image courtesy of Stephanie Strickland, used with permission.

Figure 3. Screen shot of Sea and Spar Between, farthest out zoom. Image courtesy of Stephanie Strickland, used with permission.

In reflecting on the larger significance of this collaboration, the (human) authors outline what they see as the user’s involvement as responder and critic.

//Our final claim: the most useful critique
//is a new constitution of elements. On one level, a reconfiguration of a
//source code file to add comments—by the original creator or by a critic—
//accomplishes this task. But in another, and likely more novel, way,
//computational poetics and the code developed out of its practice
//produce a widely distributed new constitution.

To the extent that the “new constitution” could not be implemented without the computer’s knowledge, intentions, and beliefs, the computer becomes not merely a device to display the results of human creativity but a collaborator in the project. By enticing the user/reader to examine the source code, the authors make clear the nature of the literary text as a cognitive assemblage performed by a human-technical complex system. By implication, the work also evokes the existence of another cognitive assemblage, activated when a reader opens the work on her computer and begins to play it.

4. Evolving//Posthuman

Sea and Spar Between does not invoke any form of artificial intelligence, and differs in this respect from my next example, which does make such an invocation. Montfort and Strickland make this explicit in their comments:

//These rules [governing how the stanzas are created] are simple; there is no elaborate AI architecture or learned statistical process at work here.

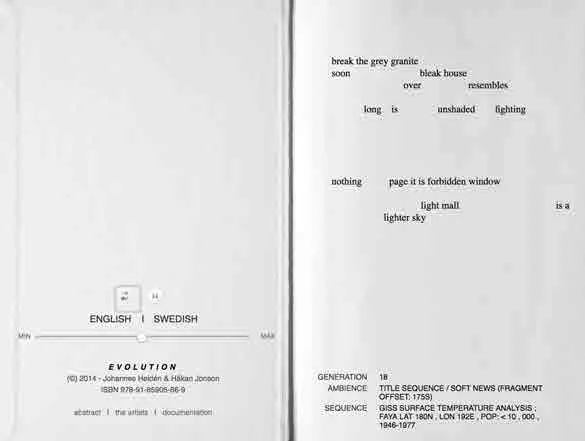

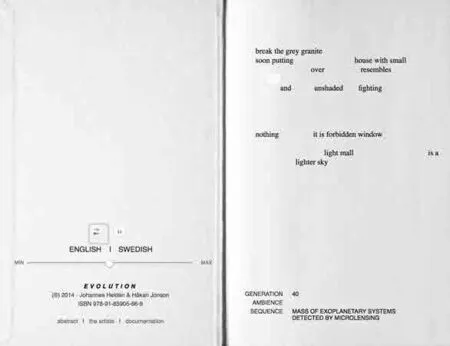

By contrast, Evolution, a collaborative work by poet Johannes Heldén and Håkan Jonson, takes the computer’s role one step further, from collaborator to co-creator, or perhaps better, poetic rival, programmed to erase and overwrite the words of Heldén’s original. Heldén is a true polymath, not only writing poetry but also creating visual art, sculpture, and sound art. His books of poetry often contain images, and his exhibitions showcase his work in all these different media. Jonson, a computer programmer by day, also creates visual and sound art, and their collaboration on Evolution reflects the authors’ multiple talents. The authors write in a preface that the “ultimate goal” of Evolution is to pass “‘The Imitation Game’ as proposed by Alan Turing in 1951 [...] when new poetry that resembles the work of the original author is created or presented through an algorithm, is it possible to make the distinction between ‘author’ and ‘programmer’?” (Evolution 2013).

These questions, ontological as well as conceptual, are better understood when framed by the actual workings of the program. In the 2013 version, the authors entered into a database all ten of the then-extant print books of Heldén’s poetry. A stochastic model of this textual corpus was then built as a Markov chain (and the corresponding Markov decision process), a discrete-state process that moves randomly, step by step, through the data, with each next step depending only on the present state and not on any previous states.
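For readers unfamiliar with the technique, a first-order, word-level Markov chain can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the code of Evolution (which is printed in the work’s print edition): the toy corpus, the function names `build_chain` and `generate`, and the word-level granularity are all hypothetical, and the Markov decision process the authors mention is not modeled here.

```python
import random
from collections import defaultdict

def build_chain(words):
    """Map each word to the list of words that follow it in the corpus.

    Duplicates are kept, so random choice samples each successor in
    proportion to how often it occurs (a first-order Markov chain)."""
    chain = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        chain[current].append(nxt)
    return chain

def generate(chain, start, length, rng):
    """Walk the chain: each next word depends only on the current word."""
    out = [start]
    for _ in range(length - 1):
        successors = chain.get(out[-1])
        if not successors:  # dead end: no word ever followed this one
            break
        out.append(rng.choice(successors))
    return out

# Hypothetical toy corpus standing in for the poet's books.
corpus = "the sea and the snow and the light on the sea".split()
chain = build_chain(corpus)
print(" ".join(generate(chain, "the", 8, random.Random(1))))
```

Because only the current word is consulted, the walk has no memory of how it arrived there, which is exactly the “present and not previous states” property described above.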

This was coupled with genetic algorithms that work on an evolutionary model. At each generation, a family of algorithms (“children” of the previous generation) is created by introducing variations through a random “seed.” Randomness is necessary to create the variations upon which selection can work.

All the children in a given generation bear a Wittgensteinian “family resemblance” to one another, having the same basic structure but with minor variations. This generation of algorithms is then evaluated according to a set of fitness criteria, and one is selected as the most “fit.” In this case, the fitness criteria are based on elements of Heldén’s style; the idea is to select the “child” algorithm whose output most closely matches Heldén’s own poetic practices. This algorithm’s output is then used to modify the text, either by replacing a word (or words) or by changing how a block of white space functions, for example, putting a word where there was originally white space. (All the white spaces, coded as individual “letters” through their spatial coordinates on the page, are represented in the database as signifying elements, a data representation of Heldén’s practice of using white space as part of his poetic lexicon.)
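The generate, evaluate, select cycle described above can likewise be sketched in Python. This is a deliberately simplified, hypothetical version: each “child” here is a mutated copy of the text rather than a variant algorithm, white space is coded as a `<space>` token, and the “style profile” used as the fitness criterion (share of white space and mean word length) is an invented stand-in for whatever stylistic measures Heldén and Jonson actually used. All names are illustrative.

```python
import random

# Hypothetical token for white space, treated as a signifying element of the text.
SPACE = "<space>"

def profile(tokens):
    """Toy style profile (hypothetical): share of space tokens, mean word length."""
    words = [t for t in tokens if t != SPACE]
    space_ratio = (len(tokens) - len(words)) / len(tokens)
    mean_len = sum(map(len, words)) / len(words) if words else 0.0
    return space_ratio, mean_len

def fitness(tokens, target):
    """Higher is fitter: negative distance from the target style profile."""
    s, m = profile(tokens)
    return -(abs(s - target[0]) + abs(m - target[1]) / 10)

def mutate(tokens, lexicon, rng):
    """One child: replace a single word or space with a lexicon word or a space."""
    child = list(tokens)
    i = rng.randrange(len(child))
    child[i] = rng.choice(lexicon + [SPACE])
    return child

def next_generation(text, lexicon, target, seed, n_children=10):
    """Breed a family of variants from one random seed and keep the fittest."""
    rng = random.Random(seed)
    children = [mutate(text, lexicon, rng) for _ in range(n_children)]
    return max(children, key=lambda c: fitness(c, target))

# Toy usage with invented data: the starting poem defines the target style.
lexicon = ["snow", "signal", "forest", "light"]
text = ["snow", SPACE, "falls", SPACE, SPACE, "over", "the", "forest"]
target = profile(text)
for generation in range(5):
    text = next_generation(text, lexicon, target, seed=generation)
print(" ".join(text))
```

Seeding each generation separately mirrors the essay’s point that randomness supplies the variation while the fitness function supplies the direction; with a different seed per generation, the text keeps drifting indefinitely.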

The interface presents as an open book, with a light grey background and black font. On the left side is a choice between English and Swedish and a slider controlling how fast the text will evolve. On the right side is the text, with words and white spaces arranged as if on a print page. As the user watches, the text changes and evolves; a small white rectangle flashes to indicate spaces undergoing mutation (which might otherwise be invisible if replaced by another space). Each time the program is opened, one of Heldén’s poems is randomly chosen as a starting point, and the display begins after a few hundred iterations have already happened (the authors thought this would be more interesting than starting at the beginning). At the bottom of the “page,” the number of the generation is displayed (starting from zero, disregarding previous iterations).

Figure 4. Screen shot of Evolution, generation 18.

Figure 5. Screen shot of the same run-through of Evolution, generation 40.

Also displayed is the dataset used to construct the random seed. The dataset changes with each generation, and a total of eighteen different datasets are used, ranging from “mass of exoplanetary systems detected by imaging,” to “GISS surface temperature” for a specific latitude/longitude and range of dates, to “cups of coffee per episode of Twin Peaks” (Evolution [print book], n.p.). These playful selections mix cultural artifacts, terrestrial environmental parameters, and astronomical data, suggesting that the evolutionary process can be located within widely varying contexts. The work’s audio, experienced as a continuous and slightly varying drone, is generated in real time from sound pieces that Heldén previously composed. From this body of recordings, one-minute audio chunks are randomly selected and mixed in using cross-fade, which creates an ambient soundtrack unique to each viewing (“The Algorithm,” Evolution [print book], n.p.).
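The audio procedure, random selection of chunks joined by cross-fade, can be sketched as follows. The linear fade, the overlap length, and the representation of audio as plain lists of samples are assumptions for illustration; the essay does not specify how Evolution’s cross-fade is implemented, and the function names are hypothetical. In practice a one-minute chunk would be `60 * sample_rate` samples long; the usage line uses tiny numbers so the result is easy to inspect.

```python
import random

def crossfade(a, b, overlap):
    """Join sample lists a and b, linearly fading a out and b in across `overlap` samples."""
    overlap = max(0, min(overlap, len(a), len(b)))
    cut = len(a) - overlap
    mixed = []
    for i in range(overlap):
        w = (i + 1) / (overlap + 1)  # weight of the incoming chunk, rising toward 1
        mixed.append(a[cut + i] * (1 - w) + b[i] * w)
    return a[:cut] + mixed + b[overlap:]

def ambient_mix(pieces, n_chunks, chunk_len, overlap, rng):
    """Randomly pick fixed-length chunks from pre-composed pieces and cross-fade them.

    Assumes every piece is at least chunk_len samples long."""
    out = []
    for _ in range(n_chunks):
        piece = rng.choice(pieces)
        start = rng.randrange(len(piece) - chunk_len + 1)
        chunk = piece[start:start + chunk_len]
        out = crossfade(out, chunk, overlap) if out else chunk
    return out

# Toy usage: two hypothetical "compositions" as short lists of samples.
pieces = [[0.1 * i for i in range(50)], [1.0] * 40]
soundtrack = ambient_mix(pieces, n_chunks=3, chunk_len=10, overlap=4, rng=random.Random(7))
print(len(soundtrack))  # prints 22
```

Since both the choice of piece and the start position are random, two viewings almost never produce the same sequence of chunks, which is one plausible way to obtain a soundtrack unique to each viewing.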

The text will continue to evolve as long as the user keeps the screen open, with no necessary end point or teleology, only the continuing replacement of Heldén’s words with those of the algorithm. One could theoretically reach a point where all of Heldén’s original words have been replaced, in which case the program would continue to evolve its own constructions in exactly the same way as it had operated on Heldén’s words/spaces.

In addition to being available online, the work is also represented by a limited-edition print book (Evolution [print book] 2014), in which all the code is printed out. In this sense, the book performs in a print medium the same gambit we saw in Sea and Spar Between, mixing interpretive essays of literary criticism with algorithms so that the reader is constantly plunged into the code, even if her intent is simply to read about the work rather than to read the work itself. Moreover, the essays appear on white pages with black ink, whereas the code is displayed on black pages with white ink. The inverse color scheme functions as a material metaphor (a physical artifact whose properties have metaphoric resonances; see Hayles, 2002, 22) that suggests the Janus-like nature of digital writing, in which one side faces the human and the other the machine.

The essays, labeled as appendices but placed toward the book’s beginning and interspersed with the code sections most germane to how the program works, are brief commentaries by well-known critics of electronic literature, including John Cayley, Maria Engberg, and Jesper Olsson. Cayley (Evolution [print book], 2014, n.p.), as if infected by the work’s aesthetic, adopts a style that evolves through restatements with slight variations, thus performing as well as describing his interactions with the work. In “Appendix 2: Breath,” he suggests the work is “an extension of his [Heldén’s] field of poetic life, his articulated breath, manifest as graphically represented linguistic idealities, fragments from poetic compositions, I assume, that were previously composed by privileged human processes proceeding through the mind and body of Heldén and passing out of him in a practice of writing [...] I might be concerned, troubled because I am troubled philosophically by the ontology [...] the problematic being [...] of linguistic artifacts that are generated by compositional process such that they may never actually be—or never be able to be [...] read by any human having the mind and body to read them.” “Mind and body” repeats, as do “composed/composition,” “troubled,” and “never actually be/never be able” (ibid.), but each time in a new context that slightly alters the meaning. When Cayley speaks of being “troubled,” he refers to one of the crucial differences in embodiment between human and machine: whereas the human needs to sleep, eat, and visit the bathroom, the machine can continue indefinitely, not having the same kind of “mind and body” as human writers or readers. The sense of excess, of processes exponentially larger than human minds and bodies can contain, recalls the excess of Sea and Spar Between and gestures toward the new scales possible when computational media become co-creators.

Maria Engberg, in “Appendix 3: Chance Operations” (Evolution [print book], 2014, n.p.), compares Evolution to John Cage’s aesthetic, as indicated by her title citing Cage’s famous name for his randomizing algorithms. She quotes from another essay by Cayley (not in this volume) in which he calls for an emphasis on process over object: “‘What if we shift our attention decidedly to practices, processes, procedures—towards ways of writing and reading rather than dwelling on either textual artifacts themselves (even time-based literary objects) or the concept underpinning objects-as-artifacts?’” In Evolution, the code underlying the screenic artifact is itself a series of endless processes, displacing, mutating, evolving, so that the distinction between artifact and process becomes blurred, if not altogether deconstructed.

Jesper Olsson, in “Appendix 4: We Have to Trust the Machine” (Evolution [print book], 2014, n.p.), also sees an analogy in Cage’s work, commenting that the work “was not the poet expressing himself. He was at best a medium for something else.” This “something else” is of course the machinic intelligence struggling to enact evolutionary processes so that it can write like Heldén, albeit without the “mind and body” that produced the poetry in the first place. A disturbing analogy comes to mind: H. G. Wells’s The Island of Doctor Moreau and its half-human beasts who keep asking, “Are we not men?” In the contemporary world, the porous borderline is not between human and animal but between human and machine. Olsson sees “this way of setting up rules, coding writing programs” as “an attempt to align the subject with the world, to negotiate the differences and similarities between ourselves and the objects with which we co-exist” (ibid.).

As I have argued here and elsewhere, machine intelligence has so completely penetrated complex human systems that it has become our “natureculture,” Jonas Ingvarsson’s neologism (