A residual-based message passing algorithm for constraint satisfaction problems

Chun-Yan Zhao, Yan-Rong Fu and Jin-Hua Zhao

1 College of Science, University of Shanghai for Science and Technology, Shanghai 200093, China

2 Guangdong Provincial Key Laboratory of Nuclear Science, Institute of Quantum Matter, South China Normal University, Guangzhou 510006, China

3 Guangdong-Hong Kong Joint Laboratory of Quantum Matter, Southern Nuclear Science Computing Center, South China Normal University, Guangzhou 510006, China

Abstract Message passing algorithms, whose iterative nature captures complicated interactions among interconnected variables in complex systems and extracts information from the fixed point of iterated messages,provide a powerful toolkit in tackling hard computational tasks in optimization,inference,and learning problems.In the context of constraint satisfaction problems (CSPs), when a control parameter (such as constraint density) is tuned, multiple threshold phenomena emerge, signaling fundamental structural transitions in their solution space.Finding solutions around these transition points is exceedingly challenging for algorithm design, where message passing algorithms suffer from a large message fluctuation far from convergence.Here we introduce a residual-based updating step into message passing algorithms, in which messages with large variation between consecutive steps are given high priority in the updating process.For the specific example of model RB(revised B),a typical prototype of random CSPs with growing domains,we show that our algorithm improves the convergence of message updating and increases the success probability in finding solutions around the satisfiability threshold with a low computational cost.Our approach to message passing algorithms should be of value for exploring their power in developing algorithms to find ground-state solutions and understand the detailed structure of solution space of hard optimization problems.

Keywords:constraint satisfaction problems,model RB,message passing algorithms,residuals of messages

1.Introduction

The difficulty in understanding complex systems manifests not only in finding a proper description of their interaction topology, but also in quantifying the collective and cooperative behaviors among their constituents at multiple scales[1].Message passing algorithms prove to be an efficient tool in tackling hard computational problems formulated in graphical models [2], which is a simple description of complex interacted systems.In a typical algorithmic procedure, one defines messages on the connections of basic units in a graphical representation, derives iterative equations of messages to account for the propagation of correlations among variables, and extracts information on problem solutions through messages after a sufficiently large number of updating steps.Among the main roots of the message passing algorithms are coding theory in information theory research [3] and statistical physics of disordered systems [4].Message passing algorithms have been heavily explored in optimization problems[5,6],inference problems[7],learning problems[8,9],and so on.Different frameworks of message passing algorithms are based on mean-field theory at a certain level of approximation, typical examples of which include the belief propagation (BP) algorithm [10], the survey propagation algorithm[11],the cluster variational algorithm[12,13],and so on.

Constraint satisfaction problems (CSPs) [5] are among the most typical computational tasks in complex systems research.A typical instance of CSPs can be formulated as a bipartite graph with two types of nodes denoting m constraints and n variables respectively and edges only between constraints and variables.In a random setting, edges can be established from a null graph with only nodes by connecting a given constraint node with multiple variable nodes chosen among the variable node set with a uniform probability.A basic computational question for CSPs is to find the existence and the number of assignments to variables which satisfy all the constraints in a given instance.CSPs are heavily discussed in computational complexity theory in theoretical computer science [5], as many prototype NP-hard problems can be formulated as CSPs.In the language of statistical physics,satisfiability and optimization problems can be understood as the energy landscapes of configurations of a physical system with continuous or discrete states, while their global optima correspond to the ground-state energy of landscapes.Thus we can adopt statistical mechanical frameworks, such as the mean-field theory of spin glasses [4], to estimate the energy and the entropy of their ground-state solutions.Typical CSPs studied with the spin glass theory are the random k-satisfiability (k-SAT) problem [14, 15], the minimum vertex cover problem [16, 17], and the q-coloring problem [18].These problems focus on random CSPs with fixed domains, whose size of variables is independent of the variable number n(that is,2,2,and q as given integers,respectively).However,many practical problems, such as the Latin square problem, the nqueens problem and so on, can be transformed into random CSPs with growing domains.Among the models for these CSPs, model RB (revised B) proposed in [19] is a popular one,whose domain size grows polynomially with the variable number n.

Spin glass mean-field theory on satisfiability problems shows that fundamental structural transitions exist in the geometric organization of ground-state configurations.Taking the random k-SAT (k ≥3) problem [14] as an example, with an increase in constraint densities (ratio of constraint number to variable number), there are clustering, condensation, and SAT/UNSAT phase transitions separated by multiple thresholds,where drastic changes in the numbers and sizes of the clusters of ground-state configurations take place.Furthermore, there is a deep connection between these solution space phase transitions and the algorithmic performance in finding solutions.Specifically, around these thresholds,messages in mean-field theory often show large fluctuations,rendering impossible the convergence of messages from which a typical message passing algorithm can extract information.Meanwhile, each mean-field theory has an approximation at some level in the organization of the solution space.For example, for the BP algorithm [10], solutions are assumed to be organized in a single cluster,or a pure state.When a level of approximation fails, there are often two choices: whether we generalize the current mean-field assumption to a theoretical framework with a more complicated and computationally demanding form, or we introduce modifications at an algorithmic level to achieve better performance by leveraging the existing form of message passing algorithm.

Our focus in this paper is to suppress the fluctuation of iterative messages around thresholds, a critical problem for message-passing-based algorithms, thus to improve the performance of existing BP algorithm for solving CSPs at the algorithmic level.Our main contribution here is to define residuals in the message updating process, based on which the existing BP algorithm is further modified.We show that,for a specific CSP model RB, compared with its usual BP algorithm, our modification of the algorithm can significantly improve the convergence of the updated messages and increase the probability of finding ground-state solutions around the satisfiability thresholds at a lower computational cost.

The layout of the paper is as follows.In Section 2, we briefly introduce the concept and some known results of model RB, which is a typical CSP with growing domains.Section 3 presents the maximal residual BP (MRBP) algorithm, which modifies the current BP algorithm based on residuals in the process of updating messages.In Section 4, we illustrate and analyze the numerical results of our algorithm.In Section 5,we conclude the paper with some discussions.

2.Model RB: an example of constraint satisfaction problems

Over the past few decades,studies on CSPs have mainly focused on the initial standard CSP models, named A, B, C and D[20—22].However, it has been proven by Achlioptas et al that the random instances generated by the four standard models have trivial asymptotic insolubility with an increase in problem size [23].Therefore, in order to overcome this defect, various improved models from different perspectives in numerous efforts have been proposed successively[19,24—27].Model RB,proposed by Xu and Li to avoid the trivial unsatisfiability of model B[19],is a modification on the domain size of variables and the number of constraints of model B without special restrictions on the constraint structure.

Model RB consists of a set of n variables X={x1,x2,…,xn} and a set of m constraints C={C1, C2, … , Cm} withm=rnlnn,where r >0 is a constant.Each variable xi(i=1,2, … , n) has a nonempty domain D={1, 2, … , dn} of dn=nα(α >0 is a constant)possible values.Each constraint Ca(a=1,2,…,m)involves k(≥2)different variables,and there is a set of incompatible assignments Qa⊂Dkwhich specifies the disallowable combinations of values for the k variables.More precisely, we generate a random instance of model RB with the following two steps.

Step 1.To construct a single constraint,we randomly select k (≥2) distinct variables out of the n variables, and then randomly select exactly(p is the constraint tightness)different incompatible assignments out ofpossible ones as the set Qato restrict the values of these k variables.

Step 2.We repeat the above step to obtainm=rnlnnindependent constraints and their corresponding sets Qa(a=1, 2, …,m).

A random instance of model RB is simply the conjunction of constructing solutions for random CSPs [37, 38].Zhao et al proposed two different types of message passing algorithms guided by BP to solve random CSPs with large domains like model RB[39].Subsequently,Zhao et al proposed a reinforced BP algorithm,which can effectively force the BP equations to converge to a solution of model RB by introducing an external field with a certain probability [40].Moreover, it was shown that the solutions of model RB are grouped in disconnected clusters before the theoretical satisfaibility phase transition threshold[40].Recently,Zhao et al proposed three kinds of BP decimation algorithms,which improved the performance of BP algorithms by improving the updating method of the BP iterative equation [41].the above m randomly selected constraints.

Let Pr(SAT) be the probability that a random instance is satisfied.It is shown in [19] that

The two theorems show that model RB exhibits a sharp threshold of satisfiability at the critical values psand rs, as p and r are two control parameters of the problem.Nevertheless,we cannot rigorously determine the exact value of the satisfiability threshold for some random CSPs with fixed domains[28,29].It has been theoretically proven that almost all instances of model RB have no tree-like resolution proofs of less than exponential size [30].In other words, these random instances of model RB in the transition region are extremely difficult to solve.Numerical results,using complete and incomplete search algorithms, have confirmed the exact satisfiability thresholds and the hardness of forced and unforced satisfiable instances [31].Although model RB shows an asymptotic phase transition phenomenon, it is still very challenging to find solutions of a random instance, and the size of the problem has been restricted to n ~100 [32].Therefore,benchmarks based on model RB are widely used in various kinds of algorithm competitions such as CSP, SAT,pseudo-Boolean and so on,and these results have verified the intrinsic computational hardness of these benchmarks.

Both analytical and algorithmic studies have been carried out on model RB.On the analytical side, Fan and Shen defined a large class of random CSP models d-k-CSP which unified several related models including model RB [33, 34].Later, it was proven that the restriction on k can be weaker,which can simplify the generation of random instances [35].In order to study the transition behaviors of model RB due to finite-size effects, Zhao et al used finite-size scaling analysis to bound the width of the transition region [36].On the algorithmic side, statistical physics concepts and methods,especially the replica method and the cavity method in spin glass mean-field theory,have played a very significant role in

3.Maximal residual belief propagation algorith m

Here we propose the MRBP algorithm, which combines modifications in the updating process based on residuals with the usual BP algorithm.We first lay down the general procedure of the BP algorithm and the relevant equations, and then we define the residuals of messages in the updating process and present our algorithm in detail.

For k ≥2,model RB is NP-complete.Therefore,we take the binary case (k=2) of model RB as the tested problem.Model RB with k ≥3 can be reduced to the case of k=2 in polynomial time.

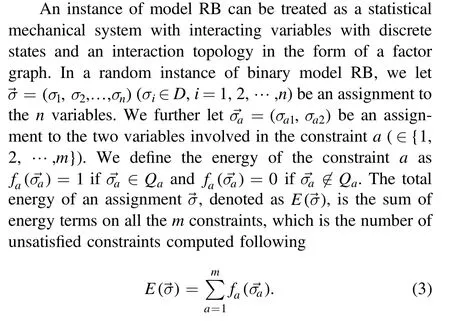

3.1.Belief propagation algorithm

An instance of binary model RB admits a natural factor graph representation,as shown in figure 1.A factor graph is a bipartite undirected graph, in which one type of nodes represents the variables (denoted by i, j, …) and the other types of nodes represent the constraints(denoted by a,b,…).An edge(a,i)connects a constraint node a and a variable node i if and only if a contains i.The average degree of each variable node in the graph isrlnn.Locally such a graph is tree-like; the typical size of a loop in the graph scales like lnn/lnlnnfor large n.

Figure 1.Part of a factor graph representing a random instance of binary model RB.Circles and squares denote the variable nodes and the constraint nodes, respectively.In the message passing algorithm of model RB,μa→i(σi)and ηi→a(σi)are the messages passed between a and i along the edge (a,i) in opposite directions.

Based on the locally tree-like property of the factor graph representation of model RB, we derive the framework of the BP algorithm [4].Associated with each edge (a, i)there are two cavity messages μa→i(σi) and ηi→a(σi) passing in opposite directions.See figure 1 for an example.Specifically, μa→i(σi) is the probability that the constraint a is satisfied if the variable i takes the value σi,and ηi→a(σi)is the probability that the variable i takes the value σiin the absence of the constraint a.Letanddenote the set of messages passed along all the edges in the factor graph at time t = (0, 1,…).According to the cavity method in disordered systems of statistical physics [4, 39], the selfconsistent BP equations of cavity messages can be derived as

Here Zi→aand Za→iare both normalization factors, V(i)a the set of constraints adjacent to the variable i removing a, and V(a)i the set of variables adjacent to the constraint a removing i.

In a typical procedure of the BP algorithm, messages on the edges in the factor graph of a given problem instance are uniformly initialized at random in [0, 1].A message iteration process is carried out, in which messages are updated following the self-consistent equations (4) and (5).After a sufficiently long iterative process,the messages may converge to a fixed point.The convergence criterion can be measured by a precision parameter ε (≪1): when the maximal absolute difference between two consecutive steps of the messages on all edges of a factor graph is smaller than ε, we can say that the message updating process converges.With the fixed point of messages, the statistical mechanical properties of model RB in an average sense can be extracted, such as the energy and entropy of ground-state solutions, and so on.

To find a solution configuration of a given problem instance,we adopt the BP decimation(BPD)algorithm,which assigns values to variables one by one based on the marginal probability after message updating.In order to find a solution configuration of the problem,we can assign the value of each variable based on its marginal probability distribution.This is the basic procedure of the BPD algorithm.With the fixed point of messagesfor all edges (a, i), the marginal probability of a variable σi(i=1,2,…,n)is computed following

In a decimation step, the variable with the largest marginal probability is selected to be assigned to its corresponding most biased component.In the decimation procedure,we iteratively switch between a variable fixing process and a message updating process after a graph simplification process upon fixing variables.

3.2.Maximal residual belief propagation algorithm

The convergence of messages in the BP iterative equations affects the performance of the algorithm.However, the BP equations usually do not converge when the control parameter approaches the theoretical satisfiability threshold.The core idea of this work is the introduction of the residuals for messages.We modify the message updating process, as we dynamically update the passing messages between the constraints and the variables based on the maximal residual in BP iterative equations, to improve the convergence and the performance of BP algorithm.The residuals of messages measure the relative difference sent from constraints to variables between two consecutive steps during the iteration of messages.If we consider all the messages in the BP algorithm as a time series arewith t=0, 1, …, the residual on an edge (a,i) at time t is defined as

A large residual indicates a large fluctuation of the messagethus the messages at these steps are far from the value after convergence of the whole message passing algorithm, if there is one.

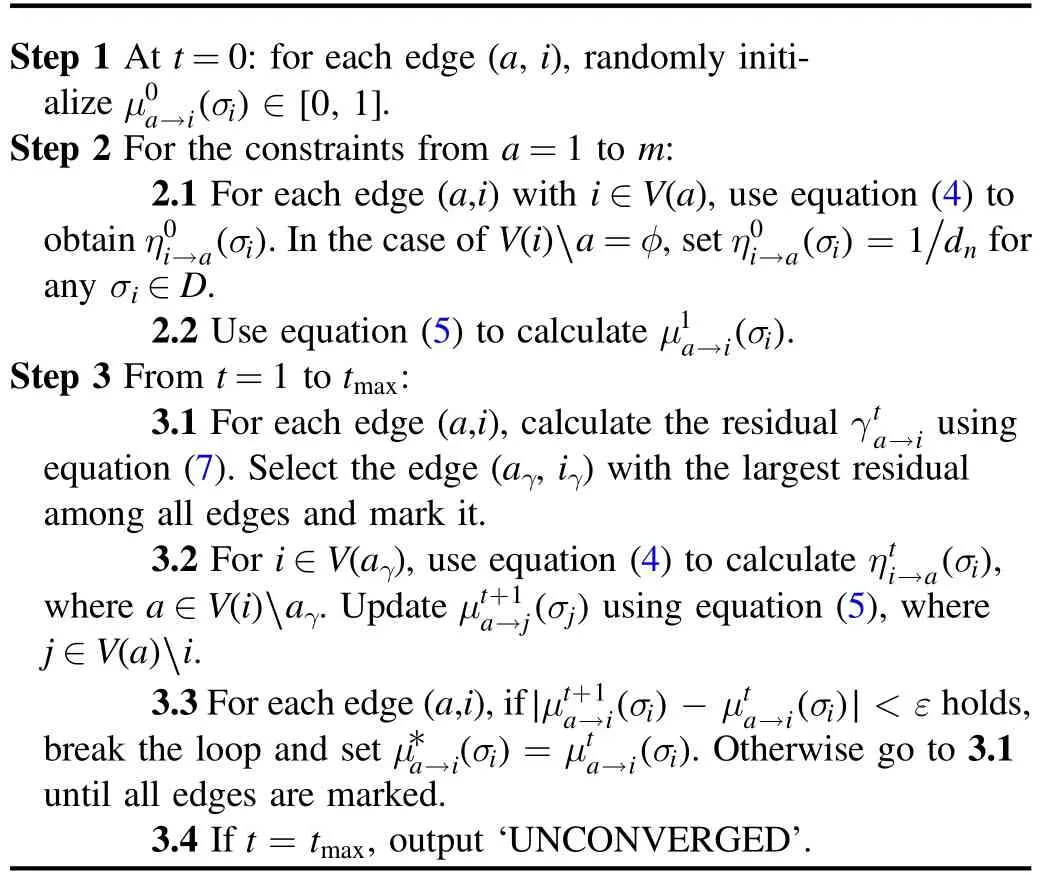

Based on the residual on each edge at a time step, the MRBP algorithm dynamically adjusts the order of updating messages according to the real-time status of the messages,in which the messages connected to the edge with the largest residual are prioritized to update.See figure 2 for an example.At time t,we select the edge(aγ,iγ)with the largest residual.We first adopt equation (4) to compute the messages sent from the variables iγand jγassociated with the constraint node aγto the neighboring constraint nodes connected to iγand jγexcept aγ(denoted by V(iγ)∪V(jγ)aγ),as indexed by the number 1 in figure 2.We need to reduce the greediness of the algorithm to achieve better performance.Hence, the updating messages are considered from the point of view of node aγrather than edge (aγ, iγ).Therefore, we use equation (4) to calculate the messages sent from the nodes iγand jγconnected to aγto V(iγ)∪V(jγ)aγsimultaneously.Then,we follow equation(5)to update the messages that the constraint nodes V(iγ)∪V(jγ)aγsend to the variables connected to the constraints except iγand jγ, as indexed by the number 2 in figure 2.In order to avoid falling into the local optimum in the iteration process, we mark the edge with the largest residual after the message updating, and the residuals on unmarked edges are recalculated.The selection of the current edge with the largest residual and the recalculation of residuals on unmarked edges are carried out iteratively until all edges are marked to ensure that messages on all edges are updated during the BP iteration process.In this way, the messages between constraints and variables are constantly updated till they converge to a fixed point or the maximal number of iterations is reached.

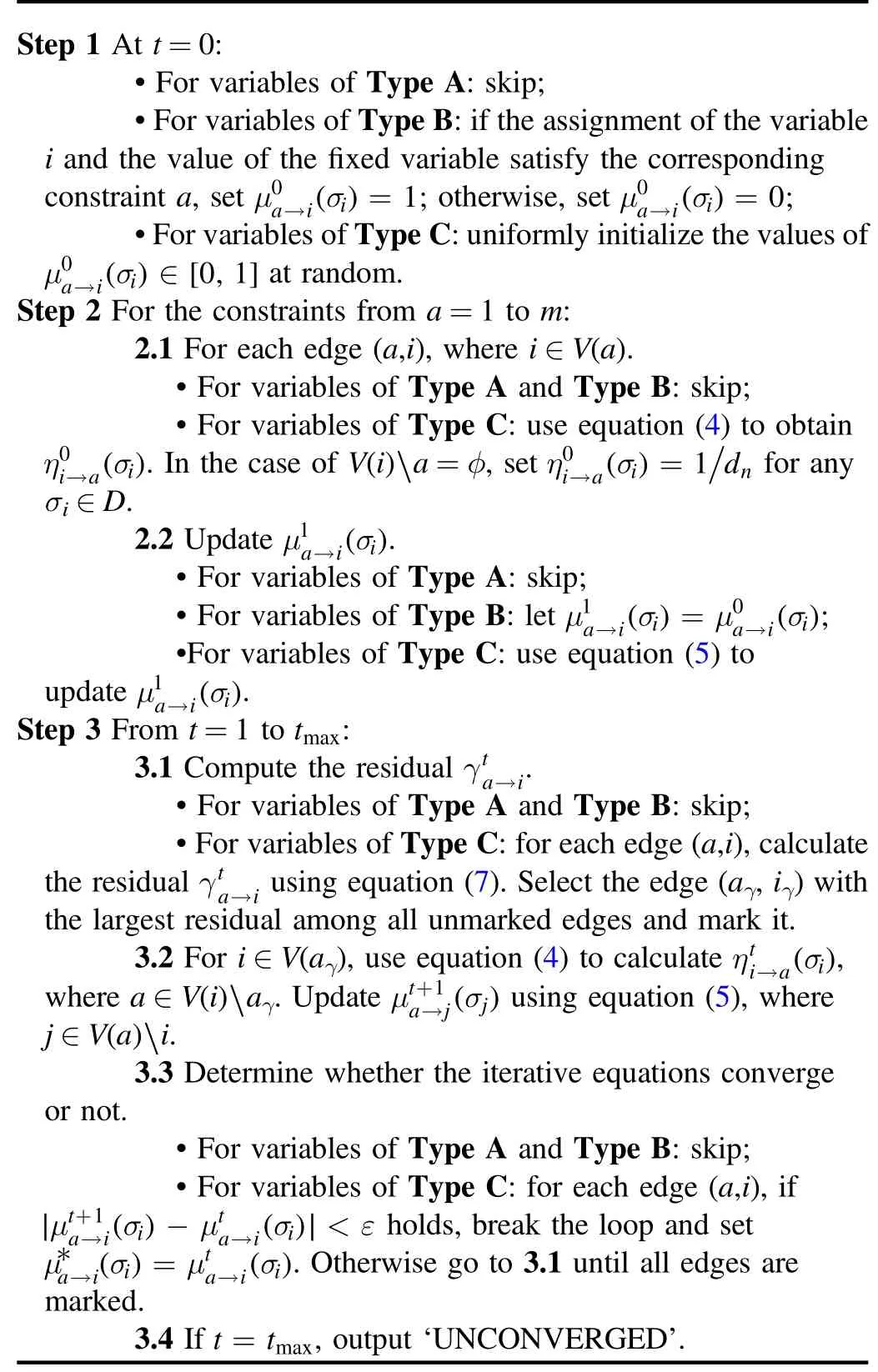

During the decimation process of the BPD algorithm,we iteratively fix variables with values until we have an assignment for all variables.Once a variable in a factor graph is assigned a certain value, variables in the factor graph are divided into three different types, as illustrated in figure 3.

Figure 2.Local procedure of message updating based on maximal residuals on a factor graph.The edge(aγ,iγ)with the largest residual is selected for updating and then marked with 0, and the numbers 1 and 2 represent the order of message updating.

Figure 3.In the decimation process of the BPD algorithm,we classify the variables into three different types, indicated by A, B and C.

· Type A:The variables that have been assigned with some values.

· Type B:The variables not assigned with values, yet connected to the same constraints as the assigned ones.

· Type C:Others.

The MRBP algorithm is detailed in the following pseudocode.

(Continued.)

MRBP algorithm

The subroutine MRBP-UPDATE is to update the messages on edges of a factor graph until a fixed point or a maximal number of iteration steps.

Subroutine MRBP-UPDATE:

The subroutine MRBP-UPDATE*is to obtain the fixed point of message updating in the decimation process, during which some variables have already been assigned with certain values.

Subroutine MRBP-UPDATE*:

4.Results

Here we test our MRBP algorithm based on residuals and show that it not only reduces the fluctuation of the messages,but also significantly improves the convergence of the BP iterative equations.Hence, it can effectively construct a solution of a random instance generated by model RB when approaching the satisfiability threshold.

As a representative parameter set to generate random instances of binary model RB,we take α=0.8 and r=3.We should mention that,in random instances with other values of parameters of α and r, we can obtain similar results on the algorithmic performance around thresholds.In Table 1, for different problem sizes n, corresponding quantities and thresholds are shown.In the MRBP algorithm, we taketmax= 400and ε=10-4.

Figure 4.Fraction of satisfiable instances as a function of p obtained by the MRBP algorithm in solving 50 random instances generated by model RB.

As the first part of our results, we consider the performance of the MRBP algorithm.The fraction of successful runs over 50 random instances, which are generated by binary model RB for n ∈{20, 40, 60, 80}, is shown in figure 4.It is observed that almost all instances are satisfiable when the constraint tightness p <0.19.The MRBP algorithm can construct a solution efficiently when p <0.20.However, the algorithm fails with probability tending to 1 when p >0.22.Therefore, the algorithm shows a good performance when p approaches the theoretical satisfiability threshold ps⋍0.23.Unfortunately, the algorithm fails in the extreme hard region,which may be closely related to the structural transition of the solution space.It is worth noting that the performance of the MRBP algorithm is almost independent of the problem size n.

In the decimation process of the MRBP algorithm, we measure the entropy of the selected variable at each time step T for different problem sizes n.We define the entropy of the decimated variable at T as

In figure 5, the results of the entropy in function of T/n for p=0.1 and n={20, 40} are presented.For a given n,the entropy curve shows a concave shape with a minimum at T/n ≈0.5, where half of the variables are fixed with certain values.For n=20 and 40, the entropyis always less than the maximum in the case in which each variable is evaluated with equal probability from its domain,which guarantees that the MRBP algorithm can select a polarized variable among the remaining free variables to fix.

We then consider the mean degree of freedom of the n variables in the decimation procedure of the BPD algorithm,which is defined as

In figure 6, the relationship between the mean degree of freedom s(p)and the constraint tightness p for n=40 is reported.Itis shown that, with an increase in constraint tightness p, s(p)monotonously decreases to 0.Therefore, we suspect that as p increases, some variables are frozen at certain assignments,which prevents the MRBP algorithm from escaping from the locally optimal solution.Further improving the performance of the algorithm involves studying in more detail the structural evolution of the solution space of model RB.

Table 1.For different problem sizes n, the domain size dn, the constraint number m, and the theoretical satisfiability threshold ps obtained by Theorem 1 are shown correspondingly.

Figure 5.EntropyS ()of the decimated variables at each step T in the MRBP algorithm for binary model RB as a function of T/n at p = 0.1.

As the second part of our results, we present a comparison between the usual BP algorithm(algorithm 3 in[39])and the MRBP algorithm.Figure 7 shows their performance comparison in terms of message convergence and solving power.It can be seen from figure 7 (a), for most instances,that if the BP algorithm converges, it can usually construct a solution of the instance.However, this is not the case for the MRBP algorithm.As shown in figure 7(b),when p <0.21,if the MRBP algorithm converges, it almost always finds the solution of the instance.However, when p ≥0.21, the assignment obtained after the algorithm has converged may not be the solution of the instance.In other words, compared with algorithm 3 in [39], although the convergence of the MRBP algorithm has been greatly improved, the constructed assignment may not be a solution of the instance.As we can see from figure 7(c),the convergence of the MRBP algorithm is significantly better than algorithm 3 in[39].For the MRBP algorithm, the number of convergent instances decreases first and then increases with an increase in p.Moreover, the convergence is the worst at p=0.21,where the probability of the MRBP algorithm finding a solution of a random instance is extremely low.In figure 7(d),the MRBP algorithm shows a consistently higher success probability in finding groundstate solutions.It is obvious that the MRBP algorithm not only improves the convergence of BP iterative equations but also greatly improves the efficiency of finding solutions.

Figure 6.Mean degree of freedom s(p)on n variables in function of p during the execution of MRBP algorithm on a random instance generated by binary model RB for n=40.

Figure 7.(a) Number of convergent instances and number of instances for which a solution can be found by algorithm 3 in [39]when solving 50 random instances of binary model RB for n=40.(b)Results of the same content with(a)yet obtained from the MRBP algorithm.(c) and (d) Comparison of the two algorithms on the number of convergent instances and the number of successful instances, respectively.

We also compare the solving efficiency of the MRBP algorithm with three related algorithms based on BP(i.e.ABP algorithm with γ=0.5, SBP algorithm with γ=0.5, and VABP algorithm)in[41]and algorithm 3 in[39].The results of 50 random instances generated by binary model RB for n=40 are shown in figure 8, which illustrate that the MPBP algorithm can find a solution of the problem with a high probability when approaching the satisfiability phase transition region.

Figure 8.Comparison of the success probability between the MRBP algorithm,ABP algorithm with γ=0.5,SBP algorithm with γ=0.5,VABP algorithm in [41], and algorithm 3 in [39].We consider here n=40.

Figure 10.Running time of the MRBP algorithm as a function of p.We consider here n=20.

Figure 9.Comparison of the average number of iterations required by the MRBP algorithm, ABP algorithm with γ=0.5, SBP algorithm with γ=0.5,VABP algorithm in[41],and algorithm 3 in[39] at each decimated step T.We consider here n=60 and p = 0.15.

Furthermore,from the perspective of computational cost,we compare the average number of iterations required at each time step for the decimated variable if the BP equations converge.In figure 9, the diagram of average iterations is shown for n=60 at p=0.15, where the five algorithms can construct a solution of a random instance with probability 1.We can see that the number of iterations required by the MRBP algorithm in the early decimation process is much lower than that of the ABP algorithm with γ=0.5, SBP algorithm with γ=0.5, VABP algorithm in [41], and algorithm 3 in[39].After approximately 90%of the variables are fixed, the iterations required by the five algorithms are drastically reduced.Therefore,compared with other novel related algorithms, the MRBP algorithm greatly improves the convergence of message passing algorithms.

Finally, we analyze the time complexity of the MRBP algorithm.The typical time complexity of BP algorithms is O(M)as M is the size of the edges in a graph instance.In our modification on BP algorithms, there are an extra O(1) message updating steps after each iteration of messages on all edges.Thus the complexity of the running time scales asO(n2lnn), in whichn2lnnis simply the size of the edges in model RB here.In figure 10,we show the running time of the MRBP algorithm as a function of p for n=20.We can see that the running time increases sharply when p is close to the satisfiability threshold, a common phenomenon for search algorithms approaching thresholds in CSPs.

Summing the above results together, for the problem of model RB, the MRBP algorithm greatly improves the convergence of BP iterative equations and shows significantly higher probabilities in finding solutions approaching the satisfiability threshold with a large reduction of iterations in computational cost.

5.Conclusion

In this paper, we propose an improved message passing algorithm based on residuals of messages to solve CSPs.The residual of messages is to quantify the fluctuation of messages as a time series between two consecutive time steps.As a modification to the usual message updating process, those messages with maximal residuals are given higher priority in the updating process.We test our MRBP algorithm on model RB, a random CSP with growing domains, which has an exact satisfiability threshold by previous analytical study.Numerical results show that our algorithm outperforms the BP algorithm with a better convergence of message updating,a higher probability in constructing a solution for the problem, and a lower computational cost.

To explore the potential implication of residuals on optimization problems and message passing algorithms, several lines of research can be carried out as future work.The first one is to combine our algorithm with a detailed description of the landscape of hard optimization problems to develop better problem-solving algorithms.The second one is to extend our algorithm to combinatorial optimization and CSPs with fixed domains,such as the minimum vertex cover problem and the k-SAT problem, which have a more complex and yet a more refined picture of solution space structure of ground-state configurations.At the same time,the idea of residuals of messages can be introduced into message passing algorithms in contexts other than sparse graphs, such as the approximated message passing algorithms on dense graphs,cluster variational message passing algorithms on lattice structures, and so on.

Acknowledgments

J.-H.Zhao is supported by Guangdong Major Project of Basic and Applied Basic Research No.2020B0301030008,Science and Technology Program of Guangzhou No.2 019 050 001,the Chinese Academy of Sciences Grant QYZDJ-SSWSYS018, and the National Natural Science Foundation of China(Grant No.12 171 479).C.-Y.Zhao is supported by the National Natural Science Foundation of China (Grant Nos.11301339 and 11491240108).

Communications in Theoretical Physics2022年3期

Communications in Theoretical Physics2022年3期

- Communications in Theoretical Physics的其它文章

- Dynamics of mixed lump-soliton for an extended (2+1)-dimensional asymmetric Nizhnik-Novikov-Veselov equation

- Representation of the coherent state for a beam splitter operator and its applications

- Entanglement witnesses of four-qubit tripartite separable quantum states*

- From wave-particle duality to wave-particlemixedness triality: an uncertainty approach

- Photon subtraction-based continuousvariable measurement-device-independent quantum key distribution with discrete modulation over a fiber-to-water channel

- Enhancement of feasibility of macroscopic quantum superposition state with the quantum Rabi-Stark model